Ever wonder why one ad grabs attention while another gets ignored? There’s a reason.

A/B testing is a method used to compare two versions of a marketing asset to determine which one performs better. Instead of relying on intuition, A/B testing lets you test a single change at a time—such as a subject line in a marketing email, the color scheme of a landing page, or the font on a billboard—to find out which one drives better results.

The goal?

More conversions, smarter marketing, and a stronger connection with your audience.

But it’s not just about numbers. Your business still needs to make sure every ad resonates with the right people in the right way. This guide breaks down how to run a successful A/B testing campaign and why it matters for your business.

A/B Testing Definition

A/B testing, also known as “split testing” or “bucket testing,” is a method that compares two versions of a marketing asset to discover which is more effective. It tests a control version (A) against a variant version (B) to measure which one earns more engagement or conversions, or better meets other key performance indicators (KPIs).

While it might seem like a digital marketing invention, the practice predates computers by decades. Prior to the internet, businesses used a form of controlled testing in direct mail campaigns to compare different headlines and designs. While not as precise as modern real-time data analysis, these early tests provided valuable customer insights.

Today, brands use A/B testing across display ads, emails, social media videos, and even digital out-of-home (DOOH) ads to optimize campaigns faster and more precisely.

Why Is A/B Testing Important?

No marketing strategy is without its flaws. What works for one brand—or even one campaign—might fall flat for another.

A/B testing helps businesses eliminate guesswork and refine campaigns using actual data. Instead of wondering why an ad or email isn’t converting, businesses can test different elements and pinpoint what works. By running A/B tests, businesses can make meaningful, data-driven decisions.

Maximizes Marketing Return on Investment (ROI)

No one wants to waste money on campaigns that don’t deliver. A/B testing helps ensure that your ad spend is focused on high-performing content. If one version of an ad consistently drives more conversions across repeated tests, that’s where your budget should go. Your spending then follows the data, which is how you maximize your return on investment.

Boosts Conversion Rates

Every business wants more customers, but getting them to take action is the tricky part. Whether it’s making a purchase, filling out a form, or signing up for a newsletter, small changes can have a big impact. Something as simple as changing a call-to-action (CTA) from “Sign Up Now” to “Start Your Free Trial” can lead to a noticeable increase in conversions.

Improves User Experience

A bad user experience can drive customers away before they even get a chance to convert. Remember: visitors come to your website with a goal, which could be learning about your product, making a purchase, or just browsing. If they run into confusing copy, a hard-to-find CTA button, or any other issue, that frustration can quickly cost you conversions.

A/B testing helps businesses figure out what’s working and what’s getting in the way. Sometimes, a small tweak—like changing a single word in your ad or adjusting a landing page’s background color—can make all the difference.

What Can You A/B Test in Marketing?

Almost anything that customers interact with can be tested. Some of the most common areas include:

- Website & Landing Pages – Headlines, CTA buttons, images, navigation menus, page layout

- Digital Ads – Headlines, images, ad copy, promotional offers

- Email & Messaging – Subject lines, email length, CTA placement, personalized vs. generic messaging, push notifications

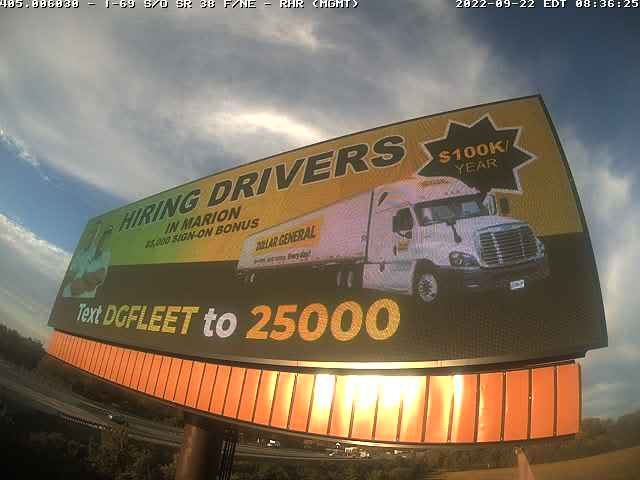

- Digital Outdoor Media Ads – Billboard messaging, color contrasts, font styles, call-to-action phrasing

- Social Media – Post formats, captions, video thumbnails, hashtags, engagement strategies

- Timing & Delivery – Best times to send emails, publish posts, or run ads

The key is to test one change at a time—otherwise, it’s hard to know what actually made the difference.

How Do You Run an A/B Test?

A/B testing is all about structure. If you don’t set up the test properly, change too many things at once, or cut the test off early, you might get misleading results. Here’s how to run an effective A/B test without wasting precious time or budget:

Step 1: Figure Out What’s Not Working

Before making changes, figure out what’s not working. Are email open rates low? Is your landing page getting traffic but no conversions? Are people clicking an ad but not making a purchase?

For example, if a billboard campaign isn’t driving store visits, the issue might be unclear messaging or an ineffective CTA. That’s your starting point.

Step 2: Analyze the Data

Don’t just guess—check the numbers.

If website visitors drop off before completing a form, is the form too long? If an email has low click-through rates, is the CTA buried too deep? If a digital out-of-home ad isn’t converting, is the text too small to read from a distance?

Good data makes for good testing.

Step 3: Formulate a Hypothesis

Once you’ve identified the issue, form a hypothesis: If I change X, then Y will improve.

For instance:

- If we shorten the email subject line, then more users will open it.

- If we use bolder fonts on our display ad, then more viewers will recall the message.

- If we move the CTA button higher on the page, then more people will see and click it.

The clearer your hypothesis, the more reliable your test results will be.

Step 4: Create Two Test Versions

Have you developed your hypothesis? If so, you’re ready to build two test versions:

- Version A (Control): The current design or content.

- Version B (Test): The same thing with one strategic change.

Remember, don’t change multiple things at once. If you’re testing a web page, tweak just the headline or the CTA placement—not both—so you know what caused any improvement.

Step 5: Run the Test and Gather Data

Now it’s time to let your test run. But be patient—stopping it too soon can lead to misleading results.

For digital campaigns, A/B testing tools will split the audience evenly between two versions of an ad, ensuring each variation gets a fair share of views. For traditional billboards or print ads, you might compare performance in different locations or time frames. Billboard impressions can be estimated from sources such as traffic data.
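One way a tool might split an audience evenly is to hash each user ID and use the result to pick a version. The sketch below is only an illustration under that assumption, not how any particular A/B testing product works. The function name `assign_variant`, the experiment label, and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "cta-test") -> str:
    """Place a user in version A or B based on a hash of their ID.

    The same user always lands in the same version, and across many
    users the split comes out close to 50/50.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Hypothetical audience of 10,000 users
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1

print(counts)  # the two counts should be close to each other
```

Because the assignment depends only on the ID and the experiment label, a returning visitor keeps seeing the same version for the whole test.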

Timing also matters. If you’re testing email subject lines, don’t just check results after an hour. Let the test run for at least 24-48 hours to account for different user behaviors throughout the day. The same goes for outdoor media. A traditional or digital billboard that gets great engagement during the morning rush hour might not perform as well in the evening.

Step 6: Analyze Results

Once you have the data, compare the results. Did Version B outperform the original? If so, roll it out. If it didn’t, or if the results are inconclusive, pick another variable and test again. The goal is to keep refining until you get the best possible results.

Example: Your new email design with the CTA button above the fold increased clicks by 10%. Not bad! But your boss wonders if an even bolder CTA could work better. Time for another test.
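To make a figure like "increased clicks by 10%" concrete, here is a small sketch of how that number could be calculated. The click and recipient counts are made up for illustration, and the helper names `conversion_rate` and `relative_lift` are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors (or recipients) who took the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """How much better (or worse) version B did relative to version A."""
    if rate_a <= 0:
        raise ValueError("rate_a must be positive")
    return (rate_b - rate_a) / rate_a


# Made-up numbers: each version was sent to 5,000 people
rate_a = conversion_rate(200, 5_000)  # control
rate_b = conversion_rate(220, 5_000)  # CTA above the fold
lift = relative_lift(rate_a, rate_b)

print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  lift: {lift:.1%}")
```

With these numbers, version A converts at 4.0% and version B at 4.4%, which works out to a 10% relative lift.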

Step 7: Keep A/B Testing Future Marketing Campaigns

A/B testing isn’t a one-time thing.

Just because one version performs better now doesn’t mean it always will. Consumer behavior shifts, trends change, and competition evolves. That’s why continuous testing is key. Keep refining, keep testing, and keep adjusting your strategy based on what your audience responds to right now.

Tips for Running A/B Tests

A/B testing is powerful—but only if it’s done right. Sloppy execution can lead to misleading results and wasted time. You might think you have a foolproof plan, but without the right approach, your test could be telling you the wrong story.

Here’s what to keep in mind:

Don’t Make Changes Mid-Test

Once a test is live, hands off the wheel. Resist the urge to tweak anything before it’s complete. Changes made halfway through, such as swapping the CTA, shifting targeting, or even adjusting colors, skew the results.

Let the test run its course, gather clean data, and then analyze what worked (or didn’t).

Use a Large Enough Sample Size

Testing with an audience that’s too small is like judging a restaurant’s popularity based on the first few customers who walk in. Just because the first three people order the same dish doesn’t mean it’s the best one on the menu. You need a lot more data to see real trends. The fewer people in your test, the higher the chance your results are just based on sheer luck, not real patterns.

To get statistically significant data, use A/B testing tools that calculate whether your sample size is large enough. Otherwise, you’re just making guesses with extra steps.
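For a rough sense of what such tools calculate, the sketch below uses a standard textbook approximation for comparing two conversion rates. It is a simplified estimate, not the method of any specific tool. The function name is hypothetical, and the 5% significance level and 80% power are common defaults assumed here, not values from this guide.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_version(
    baseline_rate: float,
    expected_rate: float,
    alpha: float = 0.05,  # assumed significance level
    power: float = 0.80,  # assumed chance of detecting a real difference
) -> int:
    """Approximate visitors needed in EACH version to detect the change."""
    if baseline_rate == expected_rate:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (
        baseline_rate * (1 - baseline_rate)
        + expected_rate * (1 - expected_rate)
    )
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from a 5% to a 6% conversion rate
print(sample_size_per_version(0.05, 0.06))
# A bigger expected change needs far fewer visitors
print(sample_size_per_version(0.05, 0.10))
```

Under these assumptions, detecting a move from 5% to 6% takes roughly 8,000 visitors per version, while a jump from 5% to 10% takes only a few hundred. Small improvements need large audiences to show up reliably.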

Let the Test Run Long Enough

Don’t rush the process. Let your test run long enough to gather reliable data, considering factors like seasonal trends, behavior differences, or daily traffic fluctuations.

A good rule of thumb?

For ads that generate quick feedback, like emails or social media ads, run your test for at least 24-48 hours to spot early trends. For things like major website changes, wait up to a week to get more reliable insights.
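One simple way to combine a rule of thumb like this with a sample size target is to take whichever asks for the longer test. The sketch below assumes you already know how many visitors each version needs and how much traffic you get per day. The function name and every number in it are hypothetical.

```python
from math import ceil


def days_to_run(
    visitors_needed_per_version: int,
    daily_visitors: int,
    minimum_days: int = 2,
) -> int:
    """Days a two-version test should run, never fewer than minimum_days."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    # Traffic is shared by both versions, so the total needed is doubled
    days_for_sample = ceil(2 * visitors_needed_per_version / daily_visitors)
    return max(minimum_days, days_for_sample)


# Hypothetical website test: 8,000 visitors per version, 3,000 visitors a day,
# and a one-week minimum for a major page change
print(days_to_run(8_000, 3_000, minimum_days=7))
```

Here the traffic alone would fill the sample in six days, so the one-week minimum decides and the test runs for seven.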

What Are Some A/B Testing Mistakes?

A/B testing is only as good as how it’s set up. Even small mistakes can lead to wasted resources or even penalties from Google. Be sure that you’re avoiding mistakes such as:

Cloaking

Cloaking is the practice of showing search engine crawlers different content than human users see in order to manipulate rankings, which violates search engine guidelines.

Google heavily penalizes websites that engage in cloaking. This can happen in A/B testing when a business serves one version of a page to search engine crawlers while users see another. To avoid this, never choose which version to show based on whether the visitor is a crawler; search engines should see the same content any other visitor could see. A/B testing should enhance the user experience, not manipulate rankings.

Confusing A/B Testing With Multivariate Testing

If you tweak the headline, CTA, and image all at the same time, you’ll never know which one actually moved the needle. Stick to one variable per test.

When you start testing multiple elements at the same time, it’s no longer A/B testing. It becomes “multivariate testing.” This method analyzes different combinations (e.g., CTA + headline, image + CTA) to find the best mix. While powerful, it requires a much more complex setup and a larger sample size to produce reliable results.
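A quick count shows why the sample size grows. In the sketch below, testing two options each for three elements produces eight versions instead of two. The headline and image labels and the 1,000 visitors per version figure are placeholders chosen for illustration.

```python
from itertools import product

# Two options for each of three elements (placeholder labels)
headlines = ["Headline 1", "Headline 2"]
ctas = ["Sign Up Now", "Start Your Free Trial"]
images = ["Image 1", "Image 2"]

# Every possible mix of headline, CTA, and image
combinations = list(product(headlines, ctas, images))
print(len(combinations))  # 2 x 2 x 2 = 8 versions

# Assumed traffic target per version, for illustration only
visitors_per_version = 1_000
ab_total = 2 * visitors_per_version
multivariate_total = len(combinations) * visitors_per_version
print(ab_total, multivariate_total)
```

At the same traffic per version, the multivariate test in this example needs four times the audience of a simple A/B test.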

For most businesses—especially those new to optimization—A/B testing is the way to go. It’s faster, simpler, and gives you actionable insights without the extra hassle.

Declaring a Winner Too Soon

Does one version look better after a day? That doesn’t mean it’ll hold up long-term. Give the test time to even out. Version B may look like it’s eking out a victory early, but without enough time, those results could be nothing more than a short-term fluctuation rather than a real performance trend.

To check whether your test results are meaningful before making changes, use a statistical significance calculator.
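Many such calculators run some form of a two-proportion z-test, although the exact method varies by tool. The sketch below is one minimal version of that test, with made-up conversion counts and a hypothetical function name. A p-value below 0.05 is a common, though not universal, threshold for calling a result significant.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Made-up results with 5,000 visitors per version
early_lead = two_proportion_p_value(200, 5_000, 220, 5_000)
clear_win = two_proportion_p_value(200, 5_000, 300, 5_000)

print(f"200 vs 220 conversions: p = {early_lead:.3f}")
print(f"200 vs 300 conversions: p = {clear_win:.6f}")
```

In this example, 220 conversions against 200 looks like a lead but does not clear the 0.05 threshold, so it could easily be chance. A gap of 300 against 200 clears it comfortably.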

Copying Someone Else’s Success

Just because a specific ad or webpage skyrocketed conversions for another brand doesn’t mean it’ll work for yours. Every audience is different—test what works for you. What might work with one demographic may not work with another, so always rely on your own data rather than assuming success will carry over to your market.

Your Message Matters—Let’s Make It Stick

Conducting A/B testing isn’t just a “nice-to-have.” Instead, it’s how brands make sure their marketing actually works.

At Alluvit Media, we specialize in out-of-home (OOH) advertising, and A/B testing is at the core of our strategy. With our digital billboards, you can swap out creative messaging and designs mid-campaign, getting real-time feedback on what resonates most. No more waiting weeks to analyze performance—you can test, adjust, and refine on the fly.

Plus, when you partner with us, you can rest easy knowing that we offer no-cost, in-house graphic design services, whether it’s for digital or traditional media.

Take the guesswork out of your next marketing campaign with a team that knows the OOH industry. Fill out our request for proposal form today!